Klokov R, Lempitsky V. Escape from cells: Deep kd-networks for the recognition of 3d point cloud models[C]//2017 IEEE International Conference on Computer Vision (ICCV). IEEE, 2017: 863-872.

1. Overview

1.1. Motivation

- rasterizing 3D models onto uniform voxel grids leads to a large memory footprint and slow processing time

- there exist a large number of indexing structures (kd-trees, octrees, binary space partitioning trees, R-trees and constructive solid geometry)

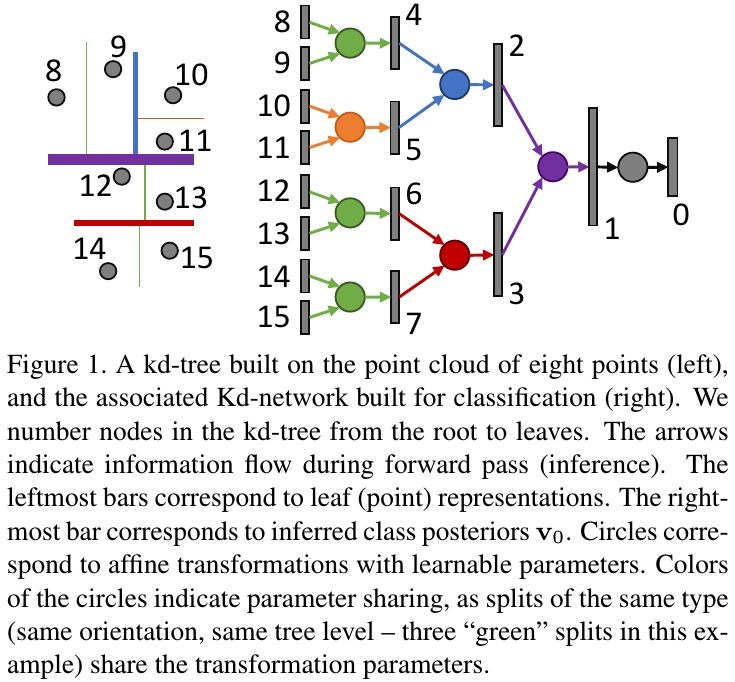

This paper proposes Kd-Networks, which

- divide the point cloud recursively to construct a kd-tree

- perform multiplicative transformations and share the parameters of these transformations (mimicking ConvNets)

- do not rely on grids and thus avoid their poor scaling behavior

1.2. Related Works

- 3D Conv (+ GAN)

- 2D Conv (2D projection of 3D obj)

- spectral Conv

- PointNet

- RNN

- OctNet (Oct-Trees)

- Graph-based ConvNet

1.3. Dataset

- classification. ModelNet10, ModelNet40

- shape retrieval. SHREC’16

- shape part segmentation. ShapeNet part dataset

2. Network

2.1. Input

Recursively divide the point cloud into two equally sized subsets. This gives N - 1 non-leaf nodes, each with a split direction d_i (along x, y or z).

- N. the fixed size of the point cloud, N = 2^D (obtained by sub-sampling or over-sampling)

- d_i. split direction of the ith node

- l_i. the level of the ith node in the tree

- c_1(i) = 2i, c_2(i) = 2i + 1. children of ith node
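The construction above can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `build_kdtree` is hypothetical, and splitting along the axis with the largest coordinate range at the median is an assumption about how the split direction is chosen.

```python
import numpy as np

def build_kdtree(points):
    """Build a balanced kd-tree over N = 2**D points with heap-style indexing.

    Returns (order, split_dirs):
      order      -- permutation of point indices; leaves read left to right
      split_dirs -- dict mapping non-leaf node index i (1..N-1) to d_i in {0, 1, 2}
    """
    n = len(points)
    assert n > 0 and n & (n - 1) == 0, "N must be a power of two"
    split_dirs = {}

    def recurse(idx, node):
        if len(idx) == 1:
            return [int(idx[0])]
        sub = points[idx]
        # Assumption: split along the axis with the largest coordinate range.
        d = int(np.argmax(sub.max(axis=0) - sub.min(axis=0)))
        split_dirs[node] = d
        # Sort along that axis and cut at the median: two equally sized halves.
        idx = idx[np.argsort(sub[:, d], kind="stable")]
        half = len(idx) // 2
        # Children of node i are 2i and 2i + 1.
        return recurse(idx[:half], 2 * node) + recurse(idx[half:], 2 * node + 1)

    order = np.array(recurse(np.arange(n), 1))
    return order, split_dirs
```

With this indexing the root is node 1, the non-leaf nodes are 1..N-1, and the kth leaf (left to right) sits at index N + k.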

2.2. Processing Data with Kd-Net

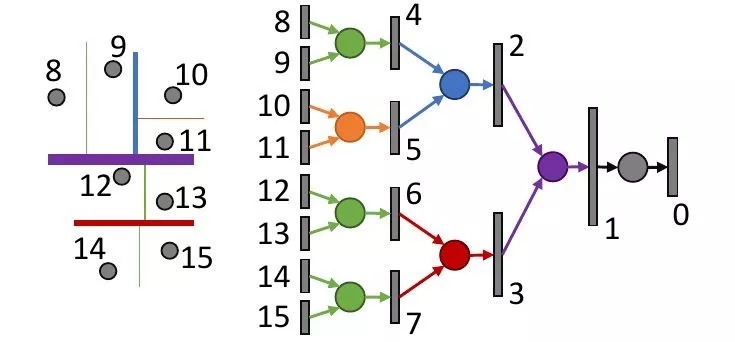

Given a kd-tree, compute the representation v_i of each node bottom-up from the representations of its children. Nodes at the same level that share a split direction are processed by the same layer (shared parameters).
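The update rule that the symbols below refer to can be written as follows. It is reconstructed from those definitions, so the exact notation should be treated as an assumption:

$$
v_i = \varphi\left( W^{l_i}_{d_i} \left[ v_{c_1(i)};\, v_{c_2(i)} \right] + b^{l_i}_{d_i} \right), \qquad d_i \in \{x, y, z\}
$$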

- v_i. the representation of ith node

- φ. ReLU non-linearity

- [·; ·]. concatenation

- W, b. parameters of the layer at level l_i for split direction d_i (dimensions: m_l x 2m_{l+1} for W, m_l for b)
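A minimal NumPy sketch of this bottom-up computation, assuming a balanced tree with heap indexing (children 2i and 2i + 1, leaves at indices N..2N-1). The function name `kdnet_forward` and the layout `weights[level][d]` with shape (m_l, 2 * m_{l+1}) are hypothetical choices made for illustration; levels are counted from 0 at the root here.

```python
import numpy as np

def kdnet_forward(leaf_feats, split_dirs, weights, biases):
    """Bottom-up pass over a balanced kd-tree with heap indexing.

    leaf_feats -- (N, m_leaf) array, leaves ordered left to right
    split_dirs -- dict: non-leaf node index i (1..N-1) -> d_i in {0, 1, 2}
    weights    -- weights[level][d] has shape (m_l, 2 * m_{l+1}); level 0 is the root
    biases     -- biases[level][d] has shape (m_l,)
    """
    n = leaf_feats.shape[0]
    v = {n + k: leaf_feats[k] for k in range(n)}  # leaves occupy indices N..2N-1
    for i in range(n - 1, 0, -1):                 # children are visited before parents
        level = i.bit_length() - 1                # root (i = 1) is level 0
        d = split_dirs[i]
        children = np.concatenate([v[2 * i], v[2 * i + 1]])
        # Parameters are shared by all nodes with the same level and split direction.
        v[i] = np.maximum(weights[level][d] @ children + biases[level][d], 0.0)
    return v[1]                                   # root representation
```

Only three weight matrices exist per level (one per split direction), regardless of how many nodes the level contains.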

2.3. Classification

2.4. Shape Retrieval

- output a descriptor vector (the network from Classification with its trained classifier removed)

- trained with the histogram loss. A Siamese loss or triplet loss could also be used

2.5. Segmentation

- mimic encoder-decoder (Hourglass)

- skip connection

2.6. Properties

- Layerwise Parameter Sharing

- CNN. shares kernels across all localized multiplications

- Kd-Net. shares a kernel (1x1) among nodes with the same split direction at the same level

- Hierarchical Representation

- Partial Invariance to Jitter

- split directions are unaffected by small jitter in the point positions (unless the jitter changes the tree)

- Non-invariance to Rotation

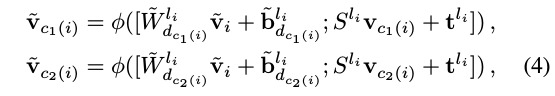

- Role of kd-tree Structure

- The kd-tree determines the order in which leaf representations are combined

- The kd-tree itself can be regarded as a shape descriptor

2.7. Details

- normalize 3D coordinates. fit the cloud into [-1, 1]^3 with the origin at the centroid

- data augmentation. perturbing geometric transformations, plus randomness injected into the kd-tree construction (split direction drawn with a probability)

- γ = 10 (parameter of the split-direction probability)

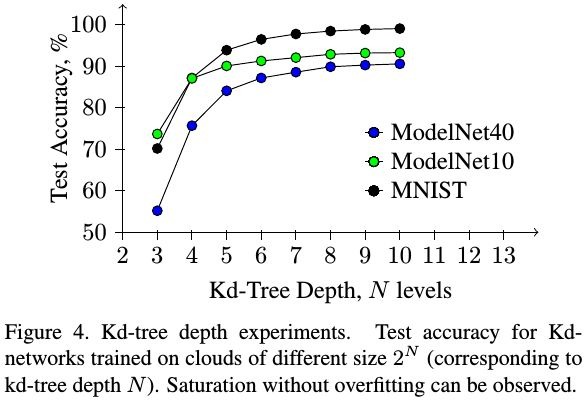
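The normalization and the randomized choice of split direction can be sketched as below. This is a hedged illustration: the function names are hypothetical, and the softmax over axis ranges normalized to unit sum, scaled by γ, is an assumption about the exact form of the probability.

```python
import numpy as np

def normalize_cloud(points):
    """Center the cloud at its centroid and scale it to fit inside [-1, 1]^3."""
    centered = points - points.mean(axis=0)
    return centered / np.abs(centered).max()

def sample_split_direction(points, gamma=10.0, rng=None):
    """Pick a split axis at random, favoring axes with a larger extent."""
    rng = np.random.default_rng() if rng is None else rng
    ranges = points.max(axis=0) - points.min(axis=0)
    # Assumption: ranges are normalized to unit sum before the softmax.
    r = ranges / max(ranges.sum(), 1e-12)
    logits = gamma * r
    probs = np.exp(logits - logits.max())  # subtract the max for numerical stability
    probs /= probs.sum()
    return int(rng.choice(len(probs), p=probs)), probs
```

A larger γ makes the choice closer to the deterministic "largest range" rule, while γ = 0 would pick each axis uniformly at random.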

3. Experiments

3.1. Details

- MNIST → 2D point cloud. each point is a pixel center

- 3D point cloud. sample faces → sample a point from each sampled face

- Self-ensemble at test time

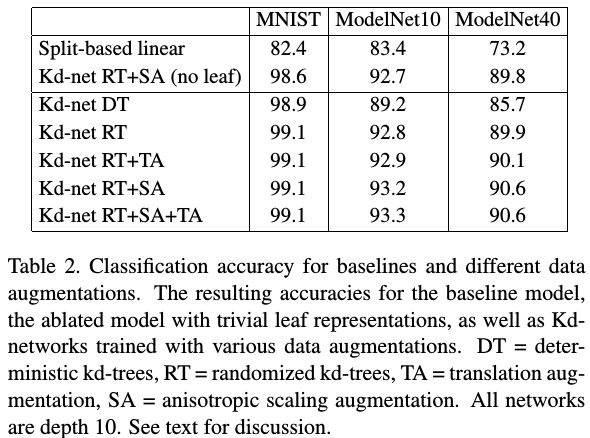
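The face-then-point sampling for 3D clouds can be sketched as below. The function name is hypothetical, and both the area-proportional face selection and the uniform barycentric sampling are assumptions about details these notes do not spell out.

```python
import numpy as np

def sample_points_from_mesh(vertices, faces, n_points, rng=None):
    """Sample n_points on a triangle mesh: pick faces, then a point inside each."""
    rng = np.random.default_rng() if rng is None else rng
    tri = vertices[faces]                              # (F, 3, 3) triangle corners
    # Assumption: faces are chosen with probability proportional to their area.
    cross = np.cross(tri[:, 1] - tri[:, 0], tri[:, 2] - tri[:, 0])
    areas = 0.5 * np.linalg.norm(cross, axis=1)
    chosen = rng.choice(len(faces), size=n_points, p=areas / areas.sum())
    # Uniform barycentric coordinates via the square-root trick.
    u, v = rng.random(n_points), rng.random(n_points)
    su = np.sqrt(u)
    w0, w1, w2 = 1.0 - su, su * (1.0 - v), su * v
    t = tri[chosen]
    return w0[:, None] * t[:, 0] + w1[:, None] * t[:, 1] + w2[:, None] * t[:, 2]
```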

- Augmentation

- TR. translation along the axes (±0.1)

- AS. anisotropic rescaling

- DT. deterministic tree

- RT. randomized tree

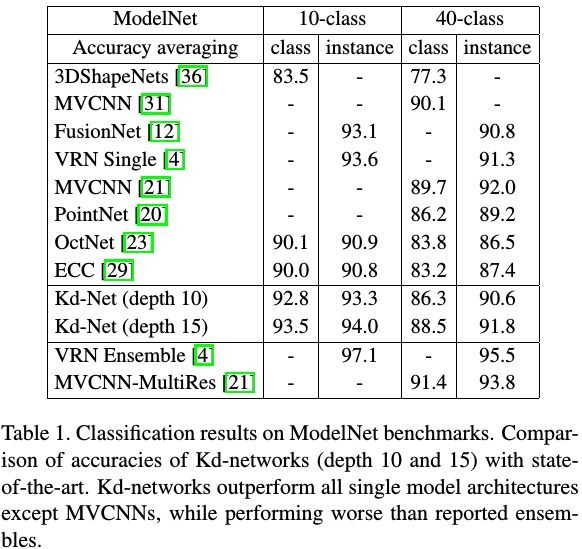
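The two geometric augmentations (TR and AS) might look like the sketch below. The function names are hypothetical; interpreting ±0.1 as a fraction of the cloud's extent along each axis is an assumption, and the rescaling range is a placeholder, not a value taken from the paper.

```python
import numpy as np

def translate(points, max_shift=0.1, rng=None):
    """TR: shift each axis by up to ±max_shift of the cloud's extent along that axis."""
    rng = np.random.default_rng() if rng is None else rng
    extent = points.max(axis=0) - points.min(axis=0)
    shift = rng.uniform(-max_shift, max_shift, size=points.shape[1]) * extent
    return points + shift

def anisotropic_rescale(points, low=0.8, high=1.25, rng=None):
    """AS: scale each axis by an independent random factor (range is a placeholder)."""
    rng = np.random.default_rng() if rng is None else rng
    factors = rng.uniform(low, high, size=points.shape[1])
    return points * factors
```

Because both transforms change the coordinate ranges, they can also change which split directions the kd-tree picks, so they should be applied before the tree is built.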

3.2. Classification

3.3. Ablation

3.4. Shape Retrieval

- 20 rotations→pooling→FC

3.5. Part Segmentation

- upsample by duplicating random points with a small amount of added noise. This helps with rare classes

- at test time, predict on the upsampled cloud and then map the predictions back to the original points

- low memory footprint < 120 MB
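The upsampling and the mapping back to the original points can be sketched as follows. The function names and the noise scale are hypothetical, and averaging the class scores over all copies of a point is an assumption; the notes only state that predictions are mapped back.

```python
import numpy as np

def upsample_with_noise(points, n_target, noise_std=1e-3, rng=None):
    """Pad a cloud to n_target points by duplicating random points with small noise.

    Returns the padded cloud and, for every padded point, the index of the
    original point it was copied from.
    """
    rng = np.random.default_rng() if rng is None else rng
    n = len(points)
    extra = rng.integers(0, n, size=n_target - n)    # assumes n_target >= n
    source = np.concatenate([np.arange(n), extra])
    padded = points[source].copy()
    padded[n:] += rng.normal(scale=noise_std, size=(n_target - n, points.shape[1]))
    return padded, source

def map_back(pred_scores, source, n_original):
    """Average per-point class scores over all copies of each original point."""
    summed = np.zeros((n_original, pred_scores.shape[1]))
    np.add.at(summed, source, pred_scores)           # accumulate scores per original point
    counts = np.bincount(source, minlength=n_original)
    return (summed / counts[:, None]).argmax(axis=1)
```

Here `pred_scores` stands for the per-point class scores the network outputs on the padded cloud, one row per padded point.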